Built architectural interiors are full of physically sourced empirical data that we cannot see, such as temperature, humidity, and electromagnetic radiation. These environmental parameters are influenced by a plethora of internal and external conditions, such as geometry, materials, and human activities. In turn, this data affects our well-being, emotions, and functioning. How can we harness these informational flows in service of architectural design?

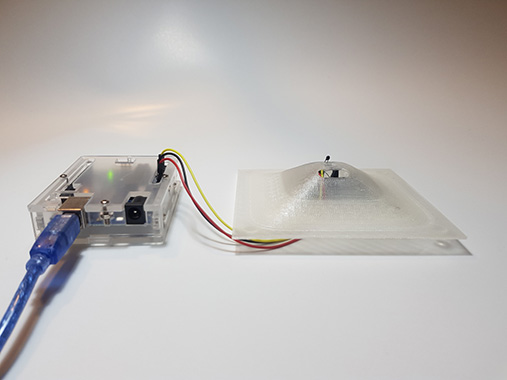

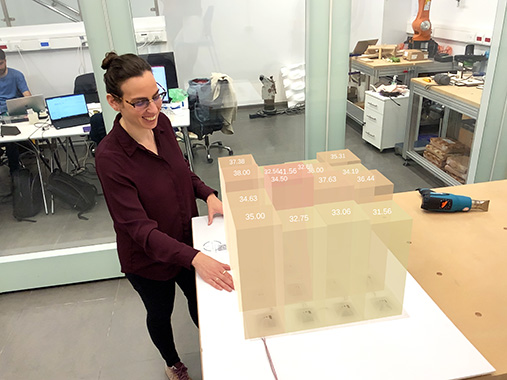

Physical computing systems connect the built physical world to the computer’s virtual space and enable information exchange between sensors and motors. However, studies stress the importance of visualizing and representing sensor data in real time and in space for the architectural exploration of the built environment. While existing parametric software is compatible with real-time visualization, the computer screen diminishes the contextual relationships between the physical space and the visualization. In this respect, augmented reality (AR) appears to provide an ideal interface. AR is an emerging technology that superimposes the physical and virtual environments into a single scene, using special goggles or even a mobile phone. It enables the representation of digital information in actual size, in situ, and in real time.

Despite the compatibility and design potential inherent in connecting the two technologies (physical computing-based sensors and augmented reality) with existing architectural design tools, this integration remains largely unexplored, and current research in this domain is limited. We argue that connecting the two technologies with existing architectural parametric design and visualization tools adds a new layer of perception to the existing architectural space, which can assist architects and designers in various phases of the design process.

To examine this, we chose prototyping as our research method, anchored in the methodology of research-through-design. The research questions aimed to explore the different articulations, potentials, and conceptual frameworks of coupling architectural computation tools with physical computing-based sensors and augmented reality: What modes of interaction between physical and virtual realities are triggered by conjoining both technologies? And what design possibilities do these new modes bear?

Throughout the thesis, we have demonstrated that coupling architectural computation tools with physical computing-based sensors and augmented reality fosters a cybernetic communication channel between the physical and virtual realities towards a new mode of perception of the architectural environment. This connection provides the opportunity to observe the built environment through its informational conditions, which are anchored in a design-visualization process. This, in turn, enables the active exploration of the environment in real time and within context towards possible design activity inherently associated with sustainable approaches.